Apple has published an FAQ document detailing its response to privacy criticisms of its new iCloud Photos feature, which scans for child abuse images.

Apple’s suite of tools meant to protect children has drawn mixed reactions from security and privacy experts, with some erroneously claiming that Apple is abandoning its privacy stance. Now Apple has published a rebuttal in the form of a Frequently Asked Questions document.

“At Apple, our goal is to create technology that empowers people and enriches their lives — while helping them stay safe,” says the full document. “We want to protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material (CSAM).”

“Since we announced these features, many stakeholders including privacy organizations and child safety organizations have expressed their support of this new solution,” it continues, “and some have reached out with questions.”

The document concentrates on how criticism has conflated two issues that Apple believes are completely separate.

“What are the differences between communication safety in Messages and CSAM detection in iCloud Photos?” it asks. “These two features are not the same and do not use the same technology.”

Apple emphasizes that the new features in Messages are “designed to give parents… additional tools to help protect their children.” Images sent or received via Messages are analyzed on-device “and so [the feature] does not change the privacy assurances of Messages.”

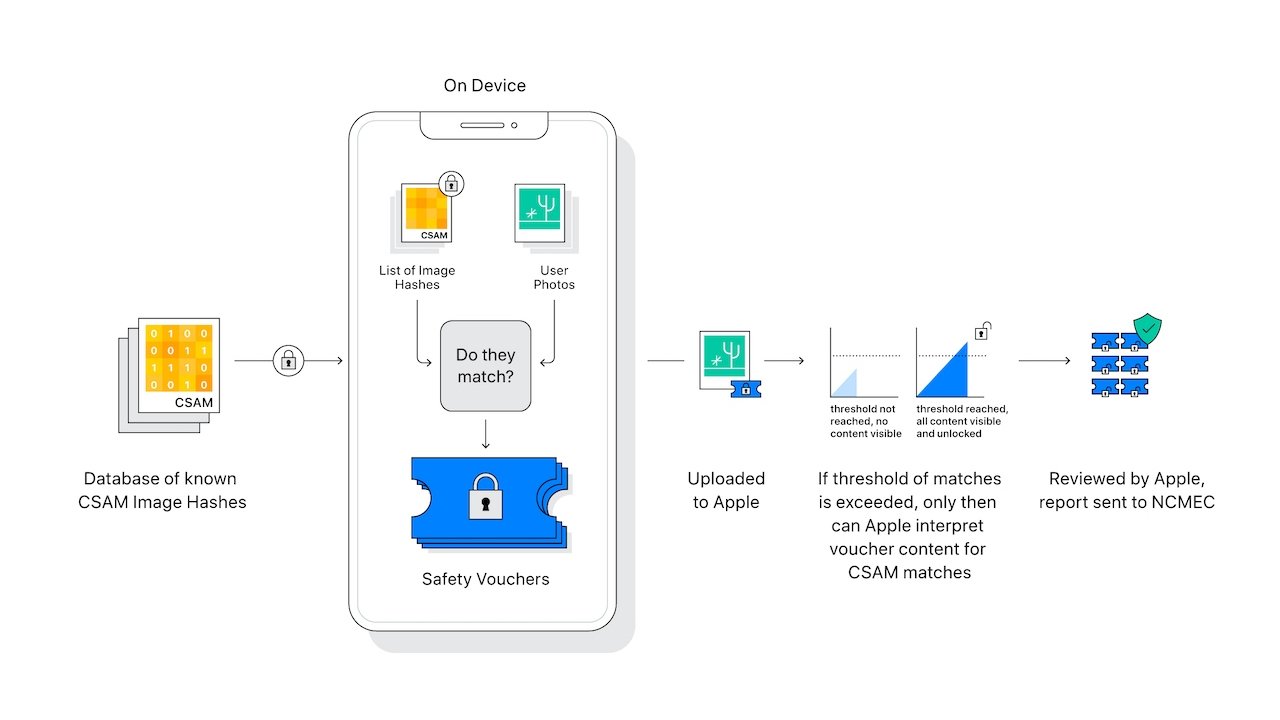

CSAM detection in iCloud Photos does not send information to Apple about “any photos other than those that match known CSAM images.”

A concern from privacy and security experts has been that this on-device scanning of images could easily be extended to benefit authoritarian governments that demand Apple expand what it searches for.

“Apple will refuse any such demands,” says the FAQ document. “We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands. We will continue to refuse them in the future.”

“Let us be clear,” it continues, “this technology is limited to detecting CSAM stored in iCloud and we will not accede to any government’s request to expand it.”

Apple’s new publication on the topic comes after an open letter asked the company to reconsider its new features.

Apple publishes response to criticism of child safety initiatives

Source: Sky Viral Trending
